BERTimbau

5.1. BERTimbau

Although multilingual BERT models exist, there has also been an effort to train monolingual BERT models for individual languages. BERTimbau is a BERT model pretrained specifically for the Portuguese language.

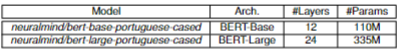

Both the BERT-Base (\(12\) layers, \(768\) hidden dimension, \(12\) attention heads, and \(110\)M parameters) and BERT-Large (\(24\) layers, \(1024\) hidden dimension, \(16\) attention heads, and \(330\)M parameters) variants were trained on the Brazilian Web as Corpus (BrWaC) [WWIV18], a large Portuguese corpus, for \(1{,}000{,}000\) steps with a learning rate of \(10^{-4}\). The maximum sequence length was set to \(S = 512\) tokens.
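As a rough sanity check on the parameter counts quoted above, the sketch below counts the weights of a standard BERT encoder from its hyperparameters. It assumes the usual BERT layout (feed-forward size of four times the hidden size, 512 position embeddings, 2 segment types, and a pooler layer); the function name `bert_param_count` and the vocabulary size of 30,000 are illustrative assumptions, not values taken from the BERTimbau release.

```python
def bert_param_count(vocab_size, hidden, layers, max_positions=512, type_vocab=2):
    """Approximate parameter count of a standard BERT encoder (no task heads)."""
    ffn = 4 * hidden            # assumed intermediate size, as in the original BERT
    layer_norm = 2 * hidden     # scale and bias

    # Token, position and segment embeddings, followed by a LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm

    # Query, key, value and output projections (weights + biases), plus LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm

    # Two dense layers of the feed-forward block, plus LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm

    # Dense layer applied to the [CLS] representation.
    pooler = hidden * hidden + hidden

    return embeddings + layers * (attention + feed_forward) + pooler


# Hypothetical vocabulary size, chosen only for illustration.
VOCAB_SIZE = 30_000
print(f"Base:  {bert_param_count(VOCAB_SIZE, 768, 12) / 1e6:.1f}M parameters")
print(f"Large: {bert_param_count(VOCAB_SIZE, 1024, 24) / 1e6:.1f}M parameters")
```

The number of attention heads does not appear in the formula because splitting the hidden dimension across heads does not change the size of the projection matrices. The exact totals depend on the vocabulary size, so the output should only be expected to land near the rounded figures in the text.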

The BrWaC corpus contains over \(2.68\) billion tokens from \(3.53\) million documents, providing a diverse dataset. The authors used only the body of each HTML page, ignoring titles and possible footnotes. They then removed the HTML tags and fixed possible “mojibake”, a type of text corruption that occurs when strings are decoded with the wrong character encoding, producing a processed corpus of \(17.5\) GB of raw text.
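To make the idea of mojibake concrete, the following minimal sketch reproduces the corruption and undoes it with the Python standard library. It is not the cleaning pipeline used for BrWaC; the helper name `fix_mojibake` and the assumption that the text was UTF-8 wrongly decoded as Latin-1 are illustrative only.

```python
def fix_mojibake(text, wrong="latin-1", right="utf-8"):
    """Undo one round of decoding with the wrong codec, if that is possible.

    Assumes the text was originally `right`-encoded bytes that were decoded
    as `wrong`. If the round trip fails, the text is returned unchanged.
    """
    try:
        return text.encode(wrong).decode(right)
    except (UnicodeEncodeError, UnicodeDecodeError):
        return text


# Simulate the corruption: UTF-8 bytes read as if they were Latin-1.
original = "ação"
garbled = original.encode("utf-8").decode("latin-1")

print(garbled)                # the corrupted form
print(fix_mojibake(garbled))  # the repaired form
print(fix_mojibake(original)) # clean text is left as it is
```

A single fixed pair of encodings covers only one common failure mode. Real web text mixes several, so a production pipeline would need a more robust heuristic than this sketch.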

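The pretraining setup was identical to BERT's. BERTimbau was trained on the masked language modeling (MLM) and next sentence prediction (NSP) tasks with the same techniques and probabilities. Each pretraining example is generated by concatenating two sequences of tokens \(x = (x_1, \ldots, x_n)\) and \(y = (y_1, \ldots, y_m)\), separated by the special [CLS] and [SEP] tokens as follows:

\[\text{[CLS]} \; x_1 \cdots x_n \; \text{[SEP]} \; y_1 \cdots y_m \; \text{[SEP]}\]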

For each sentence \(x\) in the corpus, 50% of the time \(y\) is chosen so that the pair forms a contiguous piece of text, and 50% of the time \(y\) is a random sentence from a completely different document in the corpus.
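The pairing procedure described above can be sketched as follows. This is a simplified illustration, not the original data pipeline: the function name `make_nsp_example`, the toy documents, and the fallback to a random sentence when \(x\) is the last sentence of its document are assumptions, and truncation to the maximum length is omitted.

```python
import random

CLS, SEP = "[CLS]", "[SEP]"


def make_nsp_example(documents, doc_idx, sent_idx, rng):
    """Build one NSP example from `documents`, a list of documents,
    each given as a list of tokenized sentences (lists of tokens)."""
    x = documents[doc_idx][sent_idx]
    has_next = sent_idx + 1 < len(documents[doc_idx])

    if has_next and rng.random() < 0.5:
        # Positive case: y is the sentence that actually follows x.
        y = documents[doc_idx][sent_idx + 1]
        is_next = True
    else:
        # Negative case: y is a random sentence from a different document.
        other_docs = [i for i in range(len(documents)) if i != doc_idx]
        y = rng.choice(documents[rng.choice(other_docs)])
        is_next = False

    tokens = [CLS] + x + [SEP] + y + [SEP]
    # Segment ids mark which tokens belong to x (0) and which to y (1).
    segment_ids = [0] * (len(x) + 2) + [1] * (len(y) + 1)
    return tokens, segment_ids, is_next


# Toy corpus with two documents, for illustration only.
docs = [
    [["o", "juiz", "decidiu"], ["a", "sentença", "foi", "lida"]],
    [["o", "gato", "dorme"]],
]
tokens, segment_ids, is_next = make_nsp_example(docs, 0, 0, random.Random(0))
print(tokens, segment_ids, is_next)
```

The `is_next` flag is the label for the NSP classifier, which is trained to tell contiguous pairs from random ones.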

In every example, 15% of the tokens of \(x\) and \(y\) are selected and handled in one of three ways. With 80% probability, the selected token is replaced with a special [MASK] token. With 10% probability, it is replaced with a random token from the vocabulary, and with the remaining 10% probability, it is left unchanged.
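The 80/10/10 masking rule can be illustrated with a short sketch. It makes two simplifying assumptions: each token is selected independently with probability 0.15, rather than selecting a fixed number per example, and the special [CLS] and [SEP] tokens are never selected. The name `mask_tokens` and the toy vocabulary are hypothetical.

```python
import random

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]"}


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Return (masked_tokens, labels); labels[i] holds the original token
    at selected positions and None everywhere else."""
    masked = list(tokens)
    labels = [None] * len(tokens)

    for i, tok in enumerate(tokens):
        # Skip special tokens and the tokens not selected for prediction.
        if tok in SPECIAL or rng.random() >= select_prob:
            continue

        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # Remaining 10%: keep the original token unchanged.

    return masked, labels


# Toy example, for illustration only.
vocab = ["o", "a", "juiz", "gato", "decidiu", "dorme", "sentença"]
tokens = ["[CLS]", "o", "juiz", "decidiu", "[SEP]", "a", "sentença", "foi", "lida", "[SEP]"]
masked, labels = mask_tokens(tokens, vocab, random.Random(0))
print(masked)
print(labels)
```

The model is trained to predict the original token only at positions where `labels` is not `None`. This includes the positions left unchanged, so the model cannot assume that an unmasked token is always correct.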

BERTimbau serves as the foundation for our language model, Legal-BERTimbau. Although BERTimbau is already adapted to the Portuguese language, we still needed to adapt it to our legal domain. This fine-tuning stage is essential because we need to ensure that the model producing the embeddings properly understands the records.